[2005.14165] Language Models are Few-Shot Learners - arXiv.org

May 28, 2020 · Here we show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art …

We demonstrate that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even becoming competitive with prior state-of-the-art fine-tuning …

A Practical Survey on Zero-shot Prompt Design for In-context Learning

This paper presents a comprehensive review of in-context learning techniques, focusing on different types of prompts, including discrete, continuous, few-shot, and zero-shot, and their …

Following the original ICL paper (Brown et al., 2020), we provide a definition of in-context learning: In-context learning is a paradigm that allows language models to learn tasks given only a …

In-Context Principle Learning from Mistakes - arXiv.org

In-context learning (ICL, also known as few-shot prompting) has been the standard method of adapting LLMs to downstream tasks, by learning from a few input-output examples. …
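The snippets above describe in-context learning as conditioning a model on a few input-output examples, with no parameter updates. As a minimal sketch of what such a prompt can look like, the hypothetical `build_prompt` helper below concatenates an optional instruction, k demonstrations, and the new query. The `Input:`/`Output:` template and the sentiment examples are illustrative assumptions, not a format prescribed by any of the papers listed here.

```python
from typing import Sequence, Tuple


def build_prompt(
    instruction: str,
    demonstrations: Sequence[Tuple[str, str]],
    query: str,
    input_prefix: str = "Input:",
    output_prefix: str = "Output:",
) -> str:
    """Concatenate an instruction, k input-output demonstrations, and the
    query into one text prompt (hypothetical template). With no
    demonstrations this is a zero-shot prompt; with k > 0 it is k-shot."""
    parts = [instruction.strip()] if instruction else []
    for x, y in demonstrations:
        # Each demonstration is shown as a complete input-output pair.
        parts.append(f"{input_prefix} {x}\n{output_prefix} {y}")
    # The query ends with the output prefix so the model's continuation is the answer.
    parts.append(f"{input_prefix} {query}\n{output_prefix}")
    return "\n\n".join(parts)


demos = [("great movie, loved it", "positive"), ("a dull, tedious plot", "negative")]
print(build_prompt("Classify the sentiment of each review.", demos, "surprisingly fun"))
```

With an empty demonstration list the same helper yields a zero-shot prompt, which is the only difference between the zero-shot and few-shot settings in this sketch. Ending the prompt with the output prefix leaves the answer as the model's natural continuation.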

More Samples or More Prompts? Exploring Effective Few-Shot In-Context ...

Apr 2, 2024 · In this work, we propose In-Context Sampling (ICS), a low-resource LLM prompting technique to produce confident predictions by optimizing the construction of multiple ICL …
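The ICS snippet above mentions producing confident predictions from multiple ICL prompts. The sketch below illustrates only the general idea of prompt ensembling, under stated assumptions: each prompt uses a different random subset of a demonstration pool, a model (a hypothetical `predict` callable, stubbed here by `toy_model`) is queried once per prompt, and the answers are combined by majority vote. It is not the ICS algorithm from the paper; the function names, template, and vote-share confidence are illustrative assumptions.

```python
import random
from collections import Counter
from typing import Callable, Sequence, Tuple

Demo = Tuple[str, str]


def format_prompt(demos: Sequence[Demo], query: str) -> str:
    # Hypothetical "Input:/Output:" template; any consistent format works.
    shots = "".join(f"Input: {x}\nOutput: {y}\n\n" for x, y in demos)
    return f"{shots}Input: {query}\nOutput:"


def ensemble_predict(
    predict: Callable[[str], str],
    pool: Sequence[Demo],
    query: str,
    num_prompts: int = 5,
    k: int = 2,
    seed: int = 0,
) -> Tuple[str, float]:
    """Build `num_prompts` prompts, each from a random size-k subset of the
    demonstration pool, query the model once per prompt, and return the
    majority label with its vote share as a rough confidence score."""
    rng = random.Random(seed)
    votes: Counter = Counter()
    for _ in range(num_prompts):
        demos = rng.sample(list(pool), k)
        votes[predict(format_prompt(demos, query)).strip()] += 1
    # Ties resolve to the label seen first (Counter.most_common ordering).
    label, count = votes.most_common(1)[0]
    return label, count / num_prompts


def toy_model(prompt: str) -> str:
    # Stand-in for an LLM call: looks only at the final query in the prompt.
    query = prompt.rsplit("Input: ", 1)[1]
    return " positive" if "fun" in query else " negative"


pool = [
    ("great movie", "positive"),
    ("dull plot", "negative"),
    ("loved it", "positive"),
    ("boring", "negative"),
]
print(ensemble_predict(toy_model, pool, "surprisingly fun"))
```

Using the vote share as a confidence score is one simple choice; a real setup would replace `toy_model` with an actual model call and could weight or filter votes differently.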

…Natural Language Processing (NLP) tasks. This paper presents a comprehensive review of in-context learning techniques, focusing on different types of prompts, including discrete, …

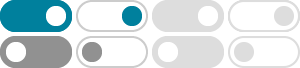

In-context learning (ICL): The simplest method is to leverage in-context learning, in which LLMs are prompted with instructions or demonstrations to solve a new task without any additional …

Fine-Tuning, Prompting, In-Context Learning and Instruction …

Feb 20, 2024 · In this work, we aim to investigate how many labelled samples are required for the specialised models to achieve this superior performance, while taking the results variance into …

One of the most remarkable behaviors observed in LLMs is in-context learning (ICL) (Brown et al., 2020), which provides LLMs with human-written instruction and a few exemplars or …

Abstract: The recent GPT-3 model (Brown et al., 2020) achieves remarkable few-shot performance solely by leveraging a natural-language prompt and a few task demonstrations as in …

1 Introduction: Large language models (LMs) have shown impressive performance on downstream tasks by simply conditioning on a few input-label pairs (demonstrations); this …